
Introduction

Learning data is data generated by students, faculty, and/or staff that relates to and documents the teaching and learning experience and academic achievement. It can be used alone or combined with the student record and other data points to support student success research. Its purpose is to provide a quantifiable basis for understanding how people learn, which in turn informs effective pedagogies and interventions that can themselves be analyzed further. For example:

- In class, faculty may use this data for proactive intervention with a student, or a student may use the data to alter learning behavior or activities.

- Instructional designers may draw on the data to better understand course activities and to inform and modify course design, structure, and assessments to improve learning.

- Institutions may leverage the data and its analysis to inform policies in order to improve outcomes, recruitment, retention, and matriculation.

Common Places Where Learning Data Lives

Learning data can be generated by many applications and systems, some obvious and others less so. When most people think about where to find learning data, they look to the Learning Management System (LMS). These centralized learning management tools capture a wealth of learning data that can show what a student accesses most frequently within a course shell, how much time they spend in the shell, and how they perform on LMS-based assessments. However, in the multi-system environment of an institution's edtech ecosystem, learning data is also generated and captured by third-party systems that may be accessed from within the LMS but operate outside the LMS environment. These can include digital course materials and reading systems, video and lecture capture, polling software, and even online tutorials, such as Lynda.com. In short, learning data can live in any tool a learner uses, as long as their access to and use of that tool can be tracked.
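To make the LMS measures above concrete, here is a minimal Python sketch, assuming a hypothetical clickstream export in which each row holds a student ID, a content item, and a timestamp. It counts how often a student opens each item and estimates time in the course shell by summing the gaps between consecutive clicks, ignoring gaps longer than an assumed 30-minute idle cutoff. Real LMS exports and vendors' time-on-task formulas differ, so treat the field names and the cutoff as placeholders.

```python
from collections import Counter, defaultdict
from datetime import datetime, timedelta

# Hypothetical clickstream rows: (student_id, content_item, ISO-8601 timestamp).
# Real LMS exports differ by vendor; these fields are illustrative only.
CLICKS = [
    ("s1", "syllabus", "2024-01-08T09:00:00"),
    ("s1", "week1-video", "2024-01-08T09:05:00"),
    ("s1", "week1-quiz", "2024-01-08T09:20:00"),
    ("s1", "week1-video", "2024-01-08T14:00:00"),
    ("s2", "syllabus", "2024-01-08T10:00:00"),
]

# Assumed idle cutoff: longer gaps are treated as a break between sessions.
IDLE_TIMEOUT = timedelta(minutes=30)


def most_accessed(clicks, student_id):
    """Count how often one student opened each content item."""
    return Counter(item for sid, item, _ in clicks if sid == student_id)


def estimated_time_in_shell(clicks):
    """Estimate time per student by summing gaps between consecutive clicks,
    skipping gaps longer than IDLE_TIMEOUT (a common heuristic, not a standard)."""
    by_student = defaultdict(list)
    for sid, _, ts in clicks:
        by_student[sid].append(datetime.fromisoformat(ts))
    totals = {}
    for sid, times in by_student.items():
        times.sort()
        total = timedelta()
        for earlier, later in zip(times, times[1:]):
            gap = later - earlier
            if gap <= IDLE_TIMEOUT:
                total += gap
        totals[sid] = total
    return totals


if __name__ == "__main__":
    print(most_accessed(CLICKS, "s1").most_common(1))  # [('week1-video', 2)]
    minutes = {sid: t.total_seconds() / 60
               for sid, t in estimated_time_in_shell(CLICKS).items()}
    print(minutes)  # {'s1': 20.0, 's2': 0.0}
```

Because the heuristic only counts time between clicks, time spent after a student's last click in a session is not counted, so the estimate errs low.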

For many institutions, learning data extends beyond learning tools into other systems, such as parts of the student information system (SIS), and can encompass items such as transcripts, majors, and course loads. In some cases, an institution may also define elements of the student record, such as a student’s PII, high school GPA, and other data generated before entering college, as learning data. These are gray areas: such elements can be included in learning data analysis profiles and can be valuable when predicting a student’s success or matriculation timeline. Note, however, that most consider these static data points, so they may not inform the need for real-time intervention.

Other Locations for Learning Data

- Some learning data lives outside centralized systems, in departmental records such as study group sign-in sheets, which can provide insight into academic success behaviors.

- Student academic support services, such as tutoring trackers, some library services, and training programs.

- There may be additional locations where learning data exists on campus, such as the SIS, admissions applications, and high school and transfer transcripts. Whether these locations house learning data or other types of data is a gray area. It is essential that your institution clearly distinguish learning data from non-learning data, because that distinction shapes other key decisions, including IT architecture, data collection, and the choice of record store.

Common Uses and Practices with Learning Data (by role)

Institutions and edtech providers collect and analyze learning data for a wide range of audiences and purposes. Learning data and analytics can be directed to students, allowing them to reflect on their achievements and learning behaviors, and can help plan or recommend support interventions that make them successful. Learning data can also serve as a predictor of student performance and can inform teaching practices and pedagogy. Institutionally, this data can inform administrator decisions about marketing and recruitment, as well as accreditation at multiple levels. Examples include:

- Intervention and Academic Early Warning Systems where faculty, students, or advisors can quickly see a student’s trajectory through a course and can be alerted when a student is predicted to do poorly. This drives early interventions.

- University of Michigan’s Student Explorer, designed to help advisors proactively identify advisees who are at risk of failing a course

- Purdue University’s Scope
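The systems named above use their own predictive models, which are not described here. As a much simpler illustration of how an early warning alert can be triggered, the Python sketch below applies fixed rules to a hypothetical per-course snapshot. The CourseSnapshot fields and all three thresholds are assumptions that an institution would replace with its own data and, ideally, a validated model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CourseSnapshot:
    """Hypothetical per-student, per-course record assembled from LMS data."""
    student_id: str
    current_grade: float      # percent, 0-100
    last_activity: date       # date of most recent LMS activity
    missing_assignments: int


# Illustrative thresholds only; not drawn from any named system.
GRADE_THRESHOLD = 70.0
MAX_INACTIVE_DAYS = 7
MAX_MISSING = 2


def risk_reasons(s: CourseSnapshot, today: date) -> list:
    """Return the rules a student trips; an empty list means no alert."""
    reasons = []
    if s.current_grade < GRADE_THRESHOLD:
        reasons.append("low grade")
    if (today - s.last_activity).days > MAX_INACTIVE_DAYS:
        reasons.append("inactive")
    if s.missing_assignments > MAX_MISSING:
        reasons.append("missing work")
    return reasons


def alerts(snapshots, today: date) -> dict:
    """Map each flagged student ID to the reasons it was flagged."""
    result = {}
    for s in snapshots:
        reasons = risk_reasons(s, today)
        if reasons:
            result[s.student_id] = reasons
    return result


if __name__ == "__main__":
    snaps = [
        CourseSnapshot("s1", 65.0, date(2024, 2, 20), 0),
        CourseSnapshot("s2", 88.0, date(2024, 2, 29), 1),
    ]
    print(alerts(snaps, date(2024, 3, 1)))  # {'s1': ['low grade', 'inactive']}
```

Returning reasons rather than a bare flag lets an advisor see why a student was flagged, which supports the kind of proactive outreach these systems are meant to drive.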

Strategic Assets that You Need to Care About

- Data governance and principles for collecting, analyzing, and managing learning data (this may encompass concerns beyond IRB guidelines). Resource: 1EdTech Learning Data & Analytics Key Principles

- Interoperability of technologies

- Data access policies and procedures for granting permission to use the data

- Architecture of a learning data-friendly environment; data science

- Change management and socialization to explain the value of learning data to faculty, staff, and students

What is Caliper Analytics?

A growing number of learning interactions take place online, which allows for data and information specific to the learning activity to be captured. This data promises to provide new insights into how particular learning interactions relate to learning outcomes. Using this data, educators can:

- Answer those seeking more accountability with measures of learning activity in addition to learning outcomes

- See which behaviors and content consistently produce the desired learning outcomes

- Compare the effectiveness of different content or interaction types

- Arm early warning systems and establish predictive measures

- Personalize curriculum in real time based on student patterns

The potential of learning analytics to innovate and shape education lies in the widespread collection and display of data by online learning environments, along with the other learner activity data many institutions already collect. However, all efforts to date have been built around proprietary standards that reinforce the silos often found in education. This makes it nearly impossible for the educator, student, or institution to see a truly holistic view of what is happening in the teaching and learning environment. Not only does each organization need to reinvent the analytics wheel, but under current conditions the resulting analytics cannot be compared effectively, because each organization counts different things in different ways.

Because many curricula ask students to work in multiple learning environments, there is a widespread need for data that can be consolidated into a single view or used for cross-provider analysis.
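A toy example shows why shared labels matter. Two hypothetical tools report a student viewing a page with different field names and verbs, so every consumer has to maintain an adapter per vendor before it can build a single view. The vendor payloads, the action mapping, and the common field names in this Python sketch are all invented for illustration.

```python
# Two hypothetical tools reporting the same kind of activity in different shapes.
VENDOR_A = [
    {"user": "s1", "verb": "opened", "page": "ch1", "ts": "2024-01-08T09:00:00Z"},
]
VENDOR_B = [
    {"learnerId": "s1", "eventType": "PAGE_VIEW",
     "resource": {"id": "ch2"}, "timestamp": "2024-01-08T09:10:00Z"},
    {"learnerId": "s2", "eventType": "PAGE_VIEW",
     "resource": {"id": "ch1"}, "timestamp": "2024-01-08T08:55:00Z"},
]

# Hand-maintained mapping from each vendor's vocabulary to one shared label.
ACTION_MAP = {"opened": "Viewed", "PAGE_VIEW": "Viewed"}


def from_vendor_a(e: dict) -> dict:
    """Adapter: translate one vendor A record into the common shape."""
    return {"actor": e["user"], "action": ACTION_MAP[e["verb"]],
            "object": e["page"], "time": e["ts"], "source": "A"}


def from_vendor_b(e: dict) -> dict:
    """Adapter: translate one vendor B record into the common shape."""
    return {"actor": e["learnerId"], "action": ACTION_MAP[e["eventType"]],
            "object": e["resource"]["id"], "time": e["timestamp"], "source": "B"}


def consolidate(a_events, b_events) -> list:
    """Merge both feeds into one list with common field names, ordered by time.
    Sorting the strings works only because both feeds use the same UTC format."""
    merged = [from_vendor_a(e) for e in a_events]
    merged += [from_vendor_b(e) for e in b_events]
    return sorted(merged, key=lambda e: e["time"])


def views_per_student(events) -> dict:
    """A 'single view' metric: page views per student across all providers."""
    counts = {}
    for e in events:
        if e["action"] == "Viewed":
            counts[e["actor"]] = counts.get(e["actor"], 0) + 1
    return counts


if __name__ == "__main__":
    print(views_per_student(consolidate(VENDOR_A, VENDOR_B)))  # {'s2': 1, 's1': 2}
```

Each new tool adds another adapter and another mapping to keep in sync; a common vocabulary such as the one Caliper defines is meant to move that work to the data producer.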

The Caliper Framework will:

- Establish a means for consistently capturing and presenting measures of learning activity, which will enable more efficient development of learning analytics features in learning environments.

- Define a common language for labeling learning data, which will set the stage for an ecosystem of higher-order applications of learning analytics.

- Provide a standard way of measuring learning activities and effectiveness, which will enable designers and providers of curriculum to measure, compare and improve quality.

- Leverage data science methods, standards, and technologies.

- Build upon existing 1EdTech standards.

- Provide best practice recommendations for transport mechanisms.
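As an illustration of a transport mechanism, the Python sketch below wraps events in an envelope shaped like the one described in Caliper 1.1 (sensor, sendTime, dataVersion, data) and prepares an HTTP POST with a JSON body and a bearer token. The sensor ID, endpoint URL, and API key are hypothetical placeholders, and the field names should be checked against the current Caliper specification before relying on them.

```python
import json
from datetime import datetime, timezone
from urllib.request import Request

# Hypothetical values: replace with your sensor ID, endpoint URL, and API key.
SENSOR_ID = "https://example.edu/sensors/1"
ENDPOINT = "https://lrs.example.edu/caliper"
API_KEY = "replace-me"

CALIPER_V1P1 = "http://purl.imsglobal.org/ctx/caliper/v1p1"


def make_envelope(events) -> dict:
    """Wrap one or more events in an envelope modeled on Caliper 1.1's."""
    sent = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    return {
        "sensor": SENSOR_ID,
        "sendTime": sent.replace("+00:00", "Z"),
        "dataVersion": CALIPER_V1P1,
        "data": list(events),
    }


def build_request(envelope: dict) -> Request:
    """Prepare (but do not send) an HTTP POST carrying the envelope as JSON."""
    body = json.dumps(envelope).encode("utf-8")
    return Request(
        ENDPOINT,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {API_KEY}"},
    )


if __name__ == "__main__":
    req = build_request(make_envelope([]))
    print(req.get_method(), req.full_url)  # POST https://lrs.example.edu/caliper
```

Passing the prepared request to urllib.request.urlopen would actually send it; the sketch stops short of that so it runs without a live endpoint.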

What are Caliper Metric Profiles and What Do They Do?

Metric profiles provide a common language for describing student activity. By establishing a set of common labels for learning activity data, the metric profiles greatly simplify the exchange of this data across multiple platforms. While metric profiles provide a standard, they do not in and of themselves provide a product or specify how to provide a product. Many different products can be created using the same labels established by the standard. It is essential that an institution using Caliper ensure that the tool consumer and tool provider support the metric profile(s) for the data they want to collect.
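The Python sketch below builds a single event under the Assessment Profile using the core properties a Caliper 1.1 event carries (@context, id, type, actor, action, object, eventTime), and adds a check in the spirit of the last sentence above: which profiles an institution wants that a tool does not declare support for. The action list reflects our reading of the 1.1 Assessment Profile, the IDs are made up, and the profile names in the usage example are illustrative; confirm all of them against the specification and the vendor's conformance documentation.

```python
import uuid
from datetime import datetime, timezone

CALIPER_V1P1 = "http://purl.imsglobal.org/ctx/caliper/v1p1"

# Actions allowed for AssessmentEvent in the Caliper 1.1 Assessment Profile,
# as we read the specification; verify against the published spec.
ASSESSMENT_ACTIONS = {"Started", "Paused", "Resumed", "Restarted", "Reset", "Submitted"}

# Core properties every event in this sketch must carry.
REQUIRED = ("@context", "id", "type", "actor", "action", "object", "eventTime")


def assessment_event(actor_id: str, assessment_id: str, action: str) -> dict:
    """Build a minimal AssessmentEvent dictionary ready for JSON serialization."""
    if action not in ASSESSMENT_ACTIONS:
        raise ValueError(f"{action!r} is not an Assessment Profile action")
    now = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    return {
        "@context": CALIPER_V1P1,
        "id": f"urn:uuid:{uuid.uuid4()}",
        "type": "AssessmentEvent",
        "actor": {"id": actor_id, "type": "Person"},
        "action": action,
        "object": {"id": assessment_id, "type": "Assessment"},
        "eventTime": now.replace("+00:00", "Z"),
    }


def missing_fields(event: dict) -> list:
    """List any required properties the event lacks."""
    return [k for k in REQUIRED if k not in event]


def unsupported_profiles(wanted, provider_supports) -> list:
    """Profiles the institution wants that the tool does not declare support for."""
    return sorted(set(wanted) - set(provider_supports))


if __name__ == "__main__":
    event = assessment_event("https://example.edu/users/554433",
                             "https://example.edu/courses/7/assess/1", "Started")
    print(missing_fields(event))  # []
    print(unsupported_profiles(["Assessment", "Media"], ["Assessment", "Reading"]))  # ['Media']
```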

Items to be Aware of for Institutions Wanting to Leverage Caliper:

- Need for wider adoption by vendors.

- IT's knowledge of how to take advantage of Caliper, and administrators' understanding of its value.

- Understanding what is needed on campus and between the vendor and the institution.

- Developing an implementable plan (don’t take on too much at first).

- Understanding that this is an evolving process.

- Understanding how to make the data actionable.

- Easily identifiable on-ramp. A learning analytics initiative does not need to be complicated. It is about using data to answer questions. Consider:

- The problem that you want to address on your campus

- The kind of data that is available to you now

- How you plan to use this data

- Evidence of how it solves a specific problem.

- Existing “data toolboxes” among current data users, and their reluctance to change.

Learning Data Collection Architecture Basics

- Common Terms and Acronyms

- SIS (Student Information System)

- LRS (Learning Record Store)

- Data Lake - A repository that stores data in its most natural state, largely unchanged from its source system.

- ETL (Extract, Transform, Load) - Describes a process of moving data from one system to another. ETL can include anything from “slogging flat files” to real-time message queuing systems.

- API (Application Programming Interface) - A set of protocols and/or techniques for describing how program components interact.

- Message Queueing Systems

- Amazon SQS

- RabbitMQ

- Apache ActiveMQ

- Webhooks - Event-driven data exchange using standard HTTP methods (e.g., POST)

- LTI - Learning Tools Interoperability® (LTI®)

- Who Needs to be Involved in the Planning?

- Enterprise architects

- SIS/DB administrators

- Technical integration team

- LMS (or other EdTech platforms) administrator

- EdTech vendor

- Analytics consumers (academic depts, upper administration, etc.)

- Security/privacy team

- Basic IT Architecture

- Servers - where data and applications are hosted, whether in the cloud or on premises

- Network - low latency

- Applications

- LMS

- SIS

- LRS

- Other systems

- Databases

- In-Memory, Columnar (e.g., SAP HANA)

- Cloud - AWS Redshift, MS Azure SQL

- Traditional DB Tech (e.g., Oracle, MS-SQL, MySQL, etc.)

- Third-party Learning Data Providers

- Check with your LMS and tool providers to find out whether they can, and will, share the learning data they collect. It is highly recommended that you advocate for that learning data to follow the Caliper standard. NOTE: There is a difference between conforming to the Caliper standard and being 1EdTech Certified. Please see imscert.org for an up-to-date listing of all products that have achieved 1EdTech conformance certification.

- Resource: http://www.educationdive.com/news/caliper-analytics-advances-next-frontier-for-data/408166/ - This article names several other edtech vendors as having “achieve[d] conformance certification for their products.”

- Dashboards and Visualizing Data

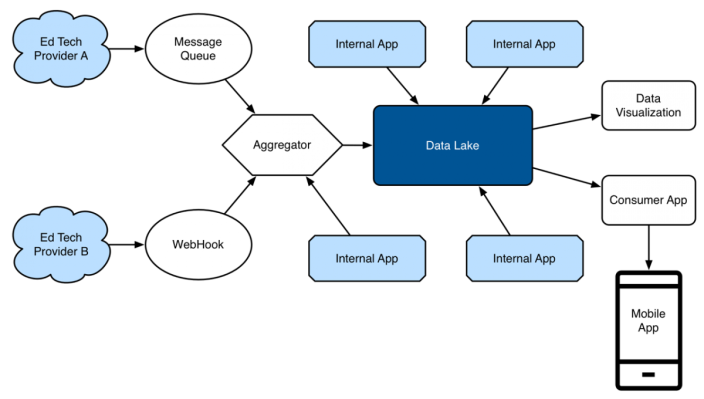

Sample Learning Data Architecture
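One way the Data Lake and ETL terms defined above can fit together is sketched here in Python: raw events are first landed unchanged as JSON lines in date-partitioned folders (the data lake), then extracted, transformed into a narrower shape, and loaded into a relational table. SQLite stands in for whichever database an institution actually uses, and the folder layout, table, and field names are assumptions, not a reference architecture.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical raw events as a source system might export them.
RAW_EVENTS = [
    {"actor": "s1", "action": "Viewed", "object": "ch1", "time": "2024-01-08T09:00:00Z"},
    {"actor": "s1", "action": "Submitted", "object": "quiz1", "time": "2024-01-08T09:30:00Z"},
    {"actor": "s2", "action": "Viewed", "object": "ch1", "time": "2024-01-09T10:00:00Z"},
]


def land_raw(events, lake_root: Path) -> None:
    """Data lake step: append events unchanged, partitioned by event date."""
    for e in events:
        part = lake_root / f"date={e['time'][:10]}"  # YYYY-MM-DD prefix
        part.mkdir(parents=True, exist_ok=True)
        with open(part / "events.jsonl", "a", encoding="utf-8") as f:
            f.write(json.dumps(e) + "\n")


def extract(lake_root: Path):
    """Extract: read every raw event back out of the lake."""
    for path in sorted(lake_root.glob("date=*/events.jsonl")):
        with open(path, encoding="utf-8") as f:
            for line in f:
                yield json.loads(line)


def transform(events):
    """Transform: keep the fields the table needs and derive a date column."""
    for e in events:
        yield (e["actor"], e["action"], e["object"], e["time"][:10])


def load(rows, conn: sqlite3.Connection) -> None:
    """Load: insert the transformed rows into a relational table."""
    conn.execute("CREATE TABLE IF NOT EXISTS activity "
                 "(student TEXT, action TEXT, object TEXT, day TEXT)")
    conn.executemany("INSERT INTO activity VALUES (?, ?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lake = Path(tmp) / "lake"
        land_raw(RAW_EVENTS, lake)
        conn = sqlite3.connect(":memory:")
        load(transform(extract(lake)), conn)
        query = "SELECT day, COUNT(*) FROM activity GROUP BY day ORDER BY day"
        print(conn.execute(query).fetchall())  # [('2024-01-08', 2), ('2024-01-09', 1)]
```

Because land_raw appends, rerunning it duplicates events; a production pipeline would typically key files by batch or deduplicate on an event ID.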

Messaging Use Cases

University of Kentucky (pdf)

University of California at Berkeley (pdf)
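The webhook and message-queueing pattern listed among the common terms can be sketched with Python's standard library alone (this sketch is not taken from the Kentucky or Berkeley use cases). A webhook handler validates an incoming POST and enqueues the event immediately; a separate consumer thread drains the queue into storage. queue.Queue stands in for a broker such as Amazon SQS, RabbitMQ, or Apache ActiveMQ, a plain list stands in for an LRS, and handle_webhook is a hypothetical framework-agnostic function rather than any web framework's API.

```python
import json
import queue
import threading

EVENT_QUEUE = queue.Queue()  # stand-in for Amazon SQS, RabbitMQ, ActiveMQ, etc.
STORE = []                   # stand-in for an LRS or database table


def handle_webhook(method: str, body: bytes) -> int:
    """Validate one webhook delivery, enqueue it, and return an HTTP status code."""
    if method != "POST":
        return 405  # Method Not Allowed
    try:
        event = json.loads(body)
    except ValueError:  # covers json.JSONDecodeError and bad byte encodings
        return 400
    if not isinstance(event, dict):
        return 400
    EVENT_QUEUE.put(event)
    return 202  # Accepted: stored asynchronously by the consumer


def consumer() -> None:
    """Move events from the queue to the store until a None sentinel arrives."""
    while True:
        event = EVENT_QUEUE.get()
        try:
            if event is None:
                return
            STORE.append(event)
        finally:
            EVENT_QUEUE.task_done()


if __name__ == "__main__":
    worker = threading.Thread(target=consumer)
    worker.start()
    print(handle_webhook("POST", b'{"actor": "s1", "action": "Submitted"}'))  # 202
    print(handle_webhook("GET", b""))  # 405
    EVENT_QUEUE.put(None)  # tell the consumer to stop
    worker.join()
    print(STORE)  # [{'actor': 's1', 'action': 'Submitted'}]
```

Decoupling receipt from storage this way lets the handler return 202 Accepted quickly even when the store is slow or briefly unavailable, which is the main reason to put a queue between the two.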

Contributors

We would like to thank the following contributors, 1EdTech Learning Data & Analytics Innovation Leadership Network participants, who lent their personal expertise to develop the document. Their intent was to create a resource that would inform and help facilitate conversations on learning data. The hope is that it will support institutional leaders and other stakeholders as they advance their practices and requirements for leveraging learning data.

Individual contributors: