There is a lot of talk about ChatGPT, its implications for education and the workforce, and whether it is safe to use in terms of data privacy. Recently, Italy banned the chatbot over privacy concerns, and some consumer groups have also voiced concerns.

1EdTech’s TrustEd Apps privacy policy rubric can help answer some of these questions so that higher education institutions, K-12 districts, and others can see what protections are and are not available through ChatGPT.

The rubric was developed by 1EdTech members representing different stakeholder groups, including higher education, K-12, and edtech suppliers. By working together, members struck a balance between the realities edtech suppliers face and the information educators need to make informed decisions about privacy and security.

We do not judge whether a tool is safe or unsafe to use; instead, 1EdTech members designed the rubric to ensure transparency in privacy policies and to help educators navigate the complicated and time-consuming process of vetting applications. The rubric gives suppliers a clear understanding of what their policies should disclose about how data will be collected and used. Still, it is up to individual governments, institutions, and districts to decide whether a given policy meets their specific privacy needs.

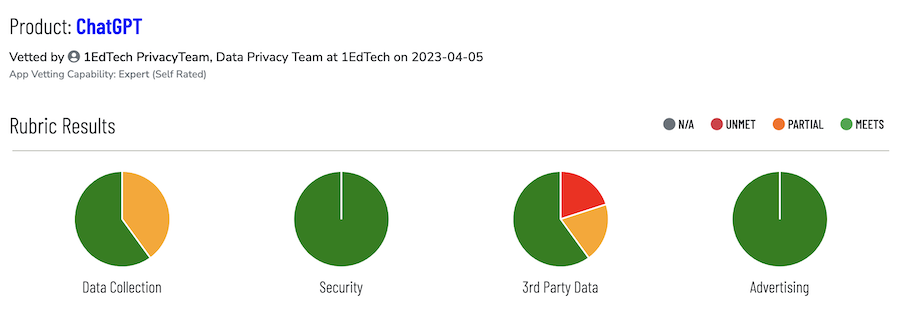

The rubric analyzes privacy policies in the following categories: what data is collected, security measures for the collected data, third-party sharing policies, and advertising. In most areas, ChatGPT meets the rubric's requirements by spelling out exactly what information it collects and how that data is handled, with a few notable exceptions.

1EdTech’s vetting of ChatGPT’s privacy policy found that the chatbot collects personal information for functionality purposes. However, the policy does not specify how long that information is stored; it states only that the retention period depends on various circumstances.

When it comes to protecting this information, ChatGPT makes a broad statement that it takes steps to protect personal information but does not give specifics. Elsewhere in the policy, however, it states that data is encrypted, that strong passwords are enforced, and that accounts can be protected through single sign-on with Microsoft and Google accounts.

ChatGPT also states that it will share information with third parties only for app functionality and for legal or business necessities. There does not appear to be an option to opt out of third-party data sharing; however, laws in the EEA and the UK require that users be allowed to delete their personal information. It is unclear whether users outside those regions may do the same.

One area that needs clarification is what other information ChatGPT collects and stores. In one section, the policy states that ChatGPT may collect and use all content provided to it. Elsewhere, however, the policy says the user owns any “input” content.

As for advertising, ChatGPT does not display any ads.

Below is a screenshot from 1EdTech’s TrustEd Apps Directory that summarizes the vetting results for ChatGPT in each of the evaluated areas. Green indicates where ChatGPT met the expectations of the privacy policy rubric, amber indicates where it partially met them, and red indicates where it did not meet them.

Update: ChatGPT updated its privacy policy after this blog post was published. 1EdTech has since updated its rubric results, displayed below.

1EdTech’s complete vetting of ChatGPT, and more than 9,000 other products, may be found in our TrustEd Apps Directory.

We're hosting several roundtable discussions on the security of ChatGPT and the implications of Generative AI at this year's Learning Impact Conference in Anaheim, California, June 5-8. To see a complete list of sessions and register, click here.

About the Authors

Tim Clark, Ed.D.

As 1EdTech's Vice President of K-12 Programs, Dr. Tim Clark assists schools and districts in adopting 1EdTech standards and practices to enable interoperable and secure digital learning ecosystems. He also provides strategic leadership for K-12 at 1EdTech in collaboration with the consortium's K-12 institutional and state department of education members.

Kevin Lewis

Kevin Lewis directs and governs 1EdTech's app vetting processes and procedures. As 1EdTech’s data privacy officer, he designs app vetting processes, built on the 1EdTech rubric, that comprehensively and consistently mitigate privacy risks and breaches of confidentiality of protected information, and he determines the effectiveness of privacy-related risk mitigation measures. Kevin also coordinates with suppliers to ensure their privacy policies are communicated adequately.